In order to run our image vectorization task, we will use the following AWS cloud components:

- AWS Batch: Scalable computing environment powering our models as embarrassingly parallel tasks running as AWS Batch jobs.
- AWS Lambda: Serverless compute environment which will conduct some pre-processing and, ultimately, trigger the batch job execution.
- Amazon DynamoDB: NoSQL database in which we will write the resulting vectors and other metadata.
- Amazon S3: Amazon Simple Storage Service will act as our image source from which our batch jobs will read the images.
- Amazon ECR: Elastic Container Registry is a Docker image repository from which our batch instances will pull the job images.

Our sample code, referenced in this post, will read the resources from S3, conduct the vectorization, and write the results as entries in the DynamoDB table.

To translate an image to a vector, we can use a pre-trained model architecture, such as AlexNet, ResNet, VGG, or more recent ones, like ResNeXt and Vision Transformers. These model architectures are available in most of the popular deep learning frameworks, and they can be further modified and extended depending on our project requirements. For this post, we will use a pre-trained ResNet18 model from MXNet.
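The read, vectorize, and write flow of the sample code can be sketched roughly as follows. This is a minimal illustration rather than the post's actual implementation: the function names, the injected `read_image`, `embed`, and `put_item` callables, and the item attribute names (`ImageKey`, `Vector`, `Dim`) are all assumptions made for the example. The one AWS-specific detail it relies on is that boto3's DynamoDB resource API does not accept Python floats, so vector values are converted to `Decimal`.

```python
from decimal import Decimal
from typing import Callable, Iterable, Sequence


def to_item(image_key: str, vector: Sequence[float]) -> dict:
    """Build a DynamoDB-style item for one image (attribute names are hypothetical).

    Floats are converted to Decimal because boto3's DynamoDB resource API
    rejects Python float values.
    """
    return {
        "ImageKey": image_key,
        "Vector": [Decimal(str(x)) for x in vector],
        "Dim": len(vector),
    }


def vectorize_images(
    keys: Iterable[str],
    read_image: Callable[[str], bytes],
    embed: Callable[[bytes], Sequence[float]],
    put_item: Callable[[dict], None],
) -> int:
    """Read each image, compute its embedding, and store the result.

    The three callables are injected so the loop can be exercised without
    AWS credentials or a deep learning framework installed.
    """
    count = 0
    for key in keys:
        image_bytes = read_image(key)    # e.g. fetch the object from S3
        vector = embed(image_bytes)      # e.g. run a pre-trained ResNet18
        put_item(to_item(key, vector))   # e.g. write to a DynamoDB table
        count += 1
    return count
```

In a real job, `read_image` might wrap an S3 `get_object` call, `put_item` might wrap a DynamoDB table's `put_item(Item=...)`, and `embed` would run the pre-trained model; keeping them as parameters makes the loop easy to test locally with simple stand-ins.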


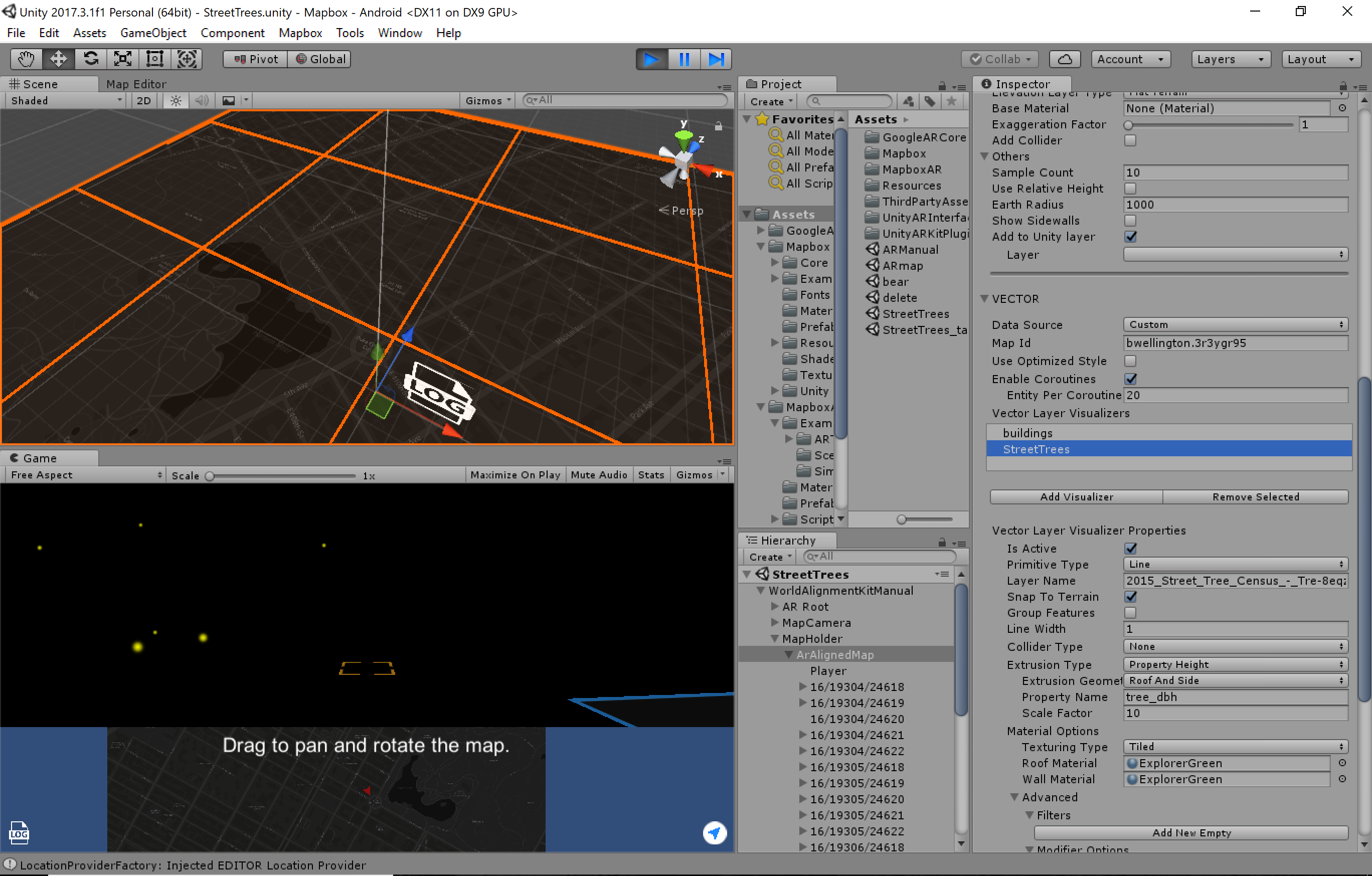

Architecture Overviewįigure 1: High-level architectural diagram explaining the major solution components.Īs seen in Figure 1, AWS Batch will pull the docker image containing our code onto provisioned hosts and start the docker containers. This post will demonstrate how we utilize the AWS Batch platform to solve a common task in many Computer Vision projects - calculating vector embeddings from a set of images so as to allow for scaling. For that reason, the common practice for deep learning approaches is to translate high-dimensional information representations, such as images, into vectors that encode most (if not all) information present in them - in other words, to create vector embeddings. Working with standard image files would be challenging, as they can vary in resolution or are otherwise too large in terms of dimensionality to be provided directly to our models. In Computer Vision, we often need to represent images in a more concise and uniform way. This is when we can utilize AWS Batch as our main computing environment, as well as Cloud Development Kit (CDK) to provision the necessary infrastructure in order to solve our task. As a Computer Vision research team at Amazon, we occasionally find that the amount of image data we are dealing with can’t be effectively computed on a single machine, but also isn’t large enough to justify running a large and potentially costly AWS Elastic Map Reduce (EMR) job.

Applying various transformations to images at scale is an easily parallelized and scaled task.
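Because each image is processed independently, the work can be divided by handing every job a disjoint slice of the image keys. The sketch below shows one way to do that, under the assumption that the jobs are submitted as an AWS Batch array job, in which case each child job receives its index in the `AWS_BATCH_JOB_ARRAY_INDEX` environment variable. The post does not state that array jobs are used, and the helper names `shard` and `keys_for_this_job` are hypothetical.

```python
import os
from typing import List, Mapping, Sequence


def shard(keys: Sequence[str], index: int, total: int) -> List[str]:
    """Return the slice of keys owned by job `index` out of `total` jobs.

    Keys are dealt out round-robin, so shards are disjoint and together
    cover every key exactly once.
    """
    if total <= 0 or not 0 <= index < total:
        raise ValueError("index must satisfy 0 <= index < total")
    return list(keys[index::total])


def keys_for_this_job(
    keys: Sequence[str],
    total_jobs: int,
    env: Mapping[str, str] = os.environ,
) -> List[str]:
    """Pick this container's shard from the array index set by AWS Batch.

    Assumption: the job runs as a child of an array job. When the variable
    is absent (for example, in a local run), index 0 is used.
    """
    index = int(env.get("AWS_BATCH_JOB_ARRAY_INDEX", "0"))
    return shard(keys, index, total_jobs)
```

With seven keys and three jobs, job 0 would take keys 0, 3, and 6, job 1 would take keys 1 and 4, and job 2 would take keys 2 and 5. Round-robin assignment is only one option; contiguous chunks would work equally well when all images cost roughly the same to process.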
